In this blog article, I want to discuss launching application features out of sight in the web services landscape and introduce a small Java framework, a personal work, that implements a sandbox to test features in a production environment. While testing with real data may seem unsettling, there are valid scenarios where you may need additional assurance mechanisms before fully transitioning to the new feature. It is about Dark Launching and Shadow Testing.

‘Dark Launching’ refers to feature enablement in production behind the scenes, like ‘Shadow Testing’, which is the testing them in the background without making them generally available. It aims to activate new software features before the GA without interrupting the operations and being transparent to customers. During the testing phase, you are to monitor, for example, whether the new integration works seamlessly with existing components under realistic data and load. ‘Dark Launching’ and ‘Shadow Testing’ are indeed very similar in practice and differ only in their intended purpose — and occasionally, they are used interchangeably.

The main goal in ‘Dark Launching’ is to observe the behavior of the new application features under the realistic workload in the production environment. Clients are mostly unaware of the test running in the background. ‘Shadow Testing’, very like ‘Dark Launching’, is usually run on production systems against real customer requests, which are processed by the existing software components during Shadow Testing, while triggering the new components at the same time. The response from the new component is often not returned to the customer, and it is to simulate the integration of the new feature with the rest of the application. In case of an error, the output is logged out in a way that the engineering team can investigate, and the response from the existing integration is returned to the customer.

‘Shadow Testing’ can be considered as an orchestrated integration test using real data without the customer’s awareness. You might wonder why integration tests alone aren’t sufficient for testing new software components. Integration tests typically involve using test users and synthetic data to mimic production data. However, synthetic data may not capture the full range of variability present in real customer data within production systems. Consequently, this can lead to gaps in test coverage.

But what about canary release and active monitoring? Canary releases necessitate sophisticated roll-out orchestration and analysis of discrepancies between the old and new versions. They are designed to detect overall discrepancies and anomalies with the current release. However, if you need to take samples from the new integration and compare the results with the old one over an extended period, canary deployment might not be the ideal choice. This would require putting the deployment pipeline on hold, potentially delaying releases.

Meet the “Shade”

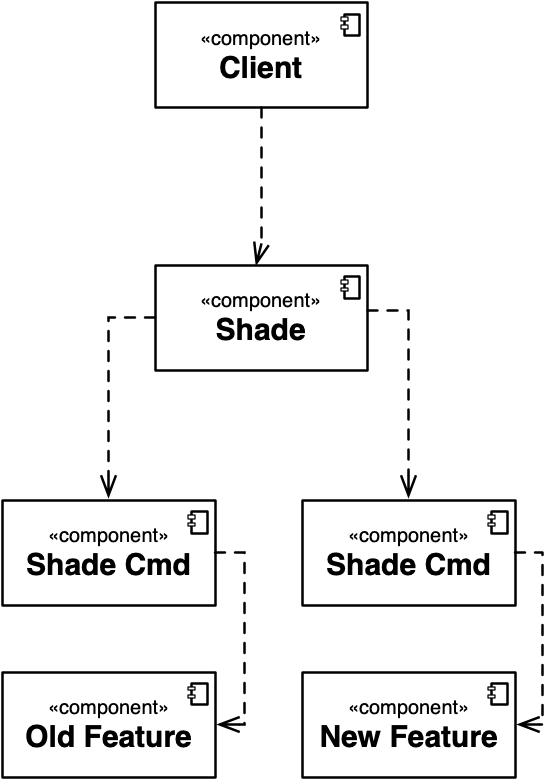

Shade is a small Java library that you can integrate into your application workflows. Essentially, it is a dispatcher implementation that activates both old and new features simultaneously. The resulting object from the old endpoint will be returned to the clients, while the response from the new feature is compared with the one from the old feature. If the results from the features are incompatible, the library notifies the feature developer by printing out a warning.

Figure 1 - Shade's component diagram

Shadow testing can be handy, for example, while switching between different versions of the same endpoint. Indeed, the backend teams, which I was the member of in the past, created such proxies between the connector layer and business logic to compare the results of two endpoints before moving to a new version of the integration. This approach assures the compatibility and reduces repeated roll-out-and-backs.